Decoding Deepfake and Synthetic Identity Fraud: Threats, Classifications, and What Fraud Leaders Need to Know


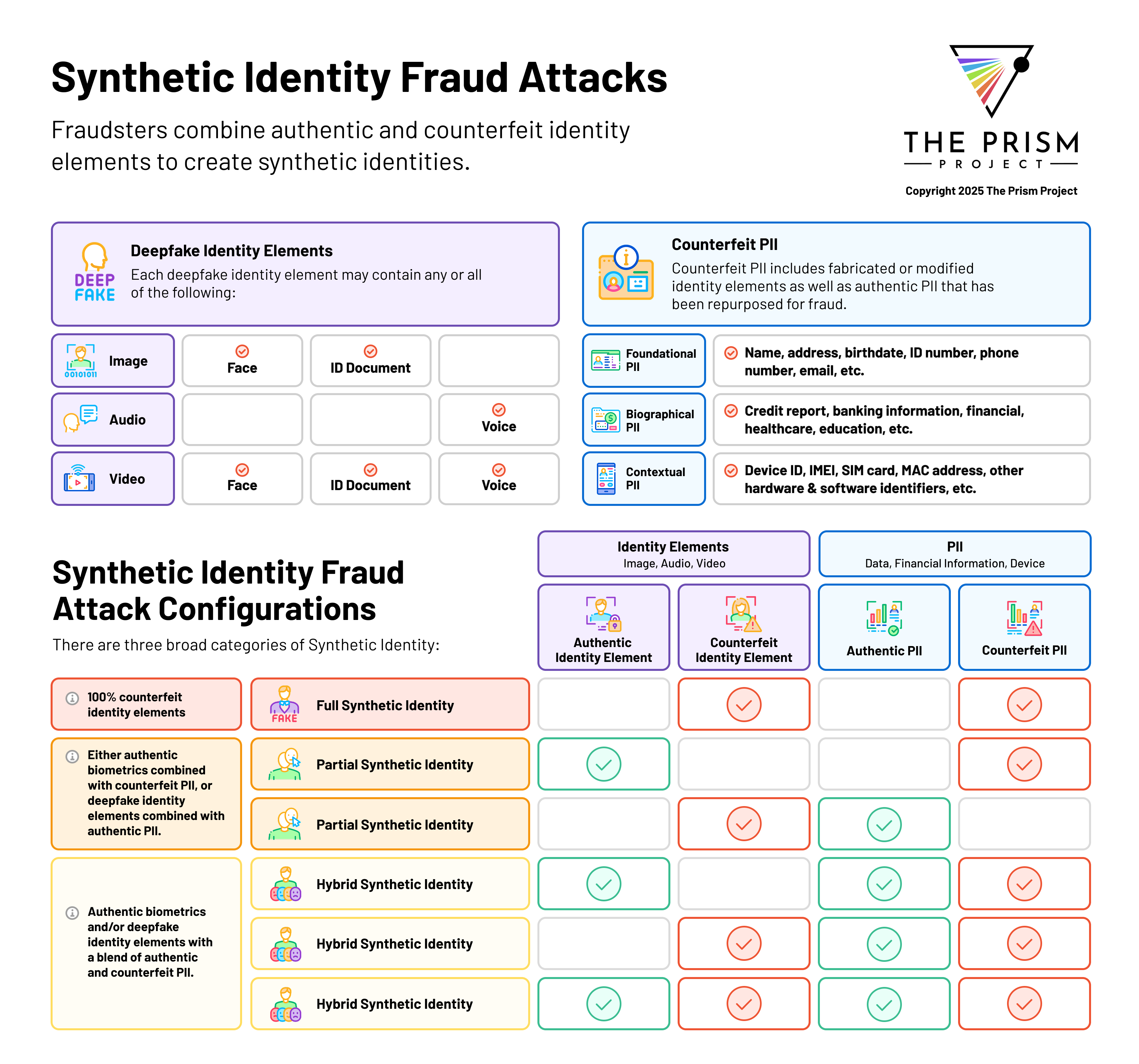

As generative AI reshapes the fraud landscape, the Deepfake and Synthetic Identity Prism Report offers a timely, in-depth framework for understanding the multifaceted threats now facing fraud prevention professionals. Two of the report’s most valuable contributions are its Synthetic Identity Cluster Classification and its Deepfake and Synthetic Identity Threat Breakdown. Together, these models show how fraudsters operate and where organizations are most vulnerable.

Understanding Synthetic Identity: Ghosts in the Machine

Synthetic identities are digital Frankensteins—constructed identities that often blend real and fabricated components to create convincing fakes. According to the Prism Report, synthetic identities are not merely fake names or stolen credentials; they are structurally complex digital identities with varying degrees of authenticity and deception.

The report classifies synthetic identities into three distinct categories:

- Full Synthetic Identities: Entirely fabricated personas built from scratch using generative AI tools.

- Partial Synthetic Identities: Blends of real and fake elements, such as real PII paired with a deepfake face.

- Hybrid Synthetic Identities: Composites of real and fake identity elements across biometric, document, and metadata layers.

Full Synthetic Identities

These are entirely fabricated personas built from scratch using generative AI tools. They may include:

- Deepfake biometric imagery

- Fake government-issued IDs

- Invented PII (e.g., addresses, SSNs, birthdates)

Because no real person is associated with these identities, they can bypass duplication checks and often appear unique to identity verification systems. These identities require specialized detection tools to uncover.

Partial Synthetic Identities

These identities blend real and fake elements, falling into two main types:

- Fake Face Partials: Real PII (e.g., name, date of birth) combined with deepfake facial imagery.

- Fake Data Partials: A real face or voice used in conjunction with fabricated PII.

Partial synthetics are especially insidious during onboarding because they often pass basic document or biometric checks—yet the data beneath is untrustworthy.

Hybrid Synthetic Identities

These are the most nuanced: composites of real and fake identity elements across biometric, document, and metadata layers. For example:

- Real face and PII with some fake biographical context

- Deepfakes layered over authentic identity fragments

Hybrids are often assembled over time and can evolve within databases, accumulating contextual history and even improving credit scores. By the time they’re flagged, they’ve often already caused significant financial damage.
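To make the three categories above concrete, here is a minimal Python sketch of how a fraud team might label an already-investigated identity. It is an illustration, not the report's methodology: the `Authenticity` values, the `IdentityLayers` fields, and the rule order in `classify` are all assumptions, and the sketch presumes that upstream checks have already judged each layer as real, fake, or mixed.

```python
from dataclasses import dataclass
from enum import Enum


class Authenticity(Enum):
    REAL = "real"
    FAKE = "fake"
    MIXED = "mixed"  # layer contains both real and fabricated elements


@dataclass(frozen=True)
class IdentityLayers:
    """Hypothetical per-layer verdicts produced by upstream checks."""
    biometric: Authenticity  # face or voice
    document: Authenticity   # ID document
    pii: Authenticity        # name, date of birth, address, SSN


def classify(layers: IdentityLayers) -> str:
    """Map layer verdicts to a category name (assumed rules, for illustration)."""
    values = (layers.biometric, layers.document, layers.pii)
    if all(v is Authenticity.REAL for v in values):
        return "genuine"
    if all(v is Authenticity.FAKE for v in values):
        return "full synthetic"
    if Authenticity.MIXED in values:
        # Real and fake material interleaved inside a single layer.
        return "hybrid synthetic"
    if layers.biometric is Authenticity.FAKE and layers.pii is Authenticity.REAL:
        return "partial synthetic (fake face)"
    if layers.biometric is Authenticity.REAL and layers.pii is Authenticity.FAKE:
        return "partial synthetic (fake data)"
    # Any other real/fake combination across layers is treated as a composite.
    return "hybrid synthetic"


example = IdentityLayers(
    biometric=Authenticity.FAKE,
    document=Authenticity.REAL,
    pii=Authenticity.REAL,
)
print(classify(example))  # partial synthetic (fake face)
```

In practice the hard part is producing the per-layer verdicts; the labeling step itself is simple once those exist.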

Breaking Down the Threat Landscape

The Deepfake and Synthetic Identity Threat Breakdown outlines how these identity forgeries are operationalized—targeting specific systems, channels, and verification stages. The report divides the attack vectors into three key categories:

- Automated Channel Threats: Exploiting systems that use cameras or microphones.

- Manual Channel Threats: Real-time deepfake video or voice used against human agents.

- Host System Threats: Backend manipulation of identity databases or template injections.

Automated Channel Threats

These threats exploit automated identity verification (IDV) and authentication systems that rely on edge device sensors, such as cameras or microphones:

- Presentation Attacks: Fraudsters use deepfake imagery or video to fool biometric sensors during live capture.

- Injection Attacks: Deepfakes are inserted directly into the signal processing pipeline—bypassing capture mechanisms.

- High-Velocity Attacks: Synthetic identities are submitted at scale, often with small variations, to overwhelm or confuse systems.

Document-based attacks are also common in automated channels:

- Counterfeit Document Presentation: AI-generated or doctored ID images shown to the system.

- Counterfeit Document Injection: Bypassing live capture by submitting manipulated data directly.
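High-velocity attacks, described above as submissions made at scale with small variations, lend themselves to a simple illustration. The sketch below groups applications whose key fields are nearly identical, using the standard library's `difflib.SequenceMatcher`. The field names (`name`, `dob`, `address`), the 0.85 similarity threshold, and the minimum cluster size are assumptions chosen for the example; a production system would more likely use purpose-built entity resolution and time windows.

```python
from difflib import SequenceMatcher


def canonical(app: dict) -> str:
    """Join the compared fields into one lowercase string without whitespace."""
    parts = (app.get("name", ""), app.get("dob", ""), app.get("address", ""))
    return "|".join("".join(p.lower().split()) for p in parts)


def flag_near_duplicates(
    apps: list[dict], threshold: float = 0.85, min_cluster: int = 3
) -> list[list[dict]]:
    """Greedily cluster applications that closely resemble a cluster's first member."""
    clusters: list[list[dict]] = []
    for app in apps:
        key = canonical(app)
        for cluster in clusters:
            ratio = SequenceMatcher(None, key, canonical(cluster[0])).ratio()
            if ratio >= threshold:
                cluster.append(app)
                break
        else:
            # No existing cluster was similar enough, so start a new one.
            clusters.append([app])
    # Only clusters large enough to suggest scripted submission are returned.
    return [c for c in clusters if len(c) >= min_cluster]


applications = [
    {"name": "John Smith", "dob": "1990-01-01", "address": "12 Oak St"},
    {"name": "Jon Smith", "dob": "1990-01-01", "address": "12 Oak St"},
    {"name": "John Smyth", "dob": "1990-01-01", "address": "12 Oak St."},
    {"name": "Maria Garcia", "dob": "1985-07-23", "address": "900 Pine Ave"},
]
suspicious = flag_near_duplicates(applications)
print(len(suspicious), "suspicious cluster(s)")
```

Comparing each application only against the first member of a cluster keeps the sketch short; it also means results depend on arrival order, which is one reason to treat this as a starting point rather than a detector.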

Manual Channel Threats

When identity checks are conducted by human agents (e.g., video interviews or live chat onboarding), attackers use:

- Live Deepfake Attacks: Real-time deepfake videos and voices presented to human reviewers. These are increasingly difficult to detect, particularly in high-volume call center environments.

Host System Threats

These more advanced threats target the backend infrastructure of the IDV system:

- Reference Data Attacks: Injecting falsified data into reference databases or exploiting outdated/unclean data.

- Template Injection: Inserting fraudulent biometric templates into systems used for future authentication.
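For teams that want to tag incidents consistently, the three channel categories and the threats listed under them can be written down as a small lookup table. The sketch below is one possible encoding in Python; the names `Channel`, `THREAT_CHANNELS`, and `threats_for` are hypothetical and do not come from the report.

```python
from enum import Enum


class Channel(Enum):
    AUTOMATED = "automated channel"
    MANUAL = "manual channel"
    HOST_SYSTEM = "host system"


# Threat names follow the breakdown above; the mapping itself is illustrative.
THREAT_CHANNELS = {
    "presentation attack": Channel.AUTOMATED,
    "injection attack": Channel.AUTOMATED,
    "high-velocity attack": Channel.AUTOMATED,
    "counterfeit document presentation": Channel.AUTOMATED,
    "counterfeit document injection": Channel.AUTOMATED,
    "live deepfake attack": Channel.MANUAL,
    "reference data attack": Channel.HOST_SYSTEM,
    "template injection": Channel.HOST_SYSTEM,
}


def threats_for(channel: Channel) -> list[str]:
    """Return the threat names filed under a channel, in alphabetical order."""
    return sorted(name for name, ch in THREAT_CHANNELS.items() if ch is channel)


print(threats_for(Channel.HOST_SYSTEM))
# ['reference data attack', 'template injection']
```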

Why This Matters

Unlike traditional fraud attempts that operate at the perimeter, synthetic identities gain entry by passing as legitimate users. Once inside, they operate undetected—opening accounts, applying for loans, and accumulating privileges like real customers.

This insider-like behavior makes synthetic identity fraud particularly damaging. According to Experian’s 2024 Global Fraud Report, synthetic identity attacks now account for up to 20% of credit charge-offs in the U.S., and the UK saw a 60% year-over-year increase in 2023–2024.

What Fraud Leaders Can Do

Fraud leaders must adopt a layered, risk-aware, and identity-first approach to counter these threats. Key recommendations from the report include:

- Implementing advanced liveness detection for biometric capture

- Using document provenance tools and media authenticity standards (e.g., C2PA)

- Monitoring contextual identity signals like geolocation and device behavior

- Continuously auditing user databases for synthetic identity patterns

- Educating human reviewers to recognize indicators of real-time deepfake manipulation
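As a rough illustration of what a layered approach can look like in code, the sketch below combines several of the signals listed above into a single risk score and a routing decision. Everything specific here is an assumption made for the example: the `Signals` fields, the weights, and the review and reject thresholds are invented, and each input is presumed to come from a separate upstream check (a liveness detector, a provenance check, geolocation and device monitoring).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signals:
    """Hypothetical outputs of independent upstream checks."""
    liveness_score: float      # 0.0 (likely spoof) to 1.0 (likely live)
    has_provenance: bool       # media passed a provenance/authenticity check
    geo_matches_address: bool  # session geolocation is consistent with stated address
    device_seen_before: bool   # device has prior history with this user


def risk_score(s: Signals) -> float:
    """Weighted sum in [0, 1]; the weights are illustrative, not calibrated."""
    liveness = min(max(s.liveness_score, 0.0), 1.0)  # clamp out-of-range inputs
    score = 0.5 * (1.0 - liveness)
    if not s.has_provenance:
        score += 0.2
    if not s.geo_matches_address:
        score += 0.2
    if not s.device_seen_before:
        score += 0.1
    return score


def decide(s: Signals, review_at: float = 0.3, reject_at: float = 0.6) -> str:
    """Route to approve, manual review, or reject based on assumed thresholds."""
    score = risk_score(s)
    if score >= reject_at:
        return "reject"
    if score >= review_at:
        return "review"
    return "approve"


print(decide(Signals(0.95, True, True, True)))     # approve
print(decide(Signals(0.90, False, True, False)))   # review
print(decide(Signals(0.20, False, False, False)))  # reject
```

The point of the layering is that no single signal decides the outcome: a convincing deepfake that passes liveness can still be routed to review when provenance and device history are missing.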

Looking Ahead: The Roadmap for Identity Security

The Deepfake and Synthetic Identity Prism Report is both a warning and a guide. It urges organizations to take human identity seriously and invest in layered, biometric-centric defenses. As deepfakes and synthetic identities continue to evolve, only a holistic, collaborative approach—grounded in strong identity verification, continuous innovation, and market education—can secure the future of digital trust[1].

Maxine Most will be chairing the Fraud Leaders Summit in Miami on June 24, 2025, where these insights and more will be discussed in depth. This report is a foundational resource for all summit participants and the broader fraud prevention community.

Explore the full report for diagrams, vendor evaluations, and strategic guidance. It’s a must-read for fraud professionals navigating the AI-powered future of digital identity.